Although the 2018 Gartner hype cycle places deep neural networks (DNNs) and machine learning (ML), of which DNNs are a subset, near the ‘Peak of Inflated Expectations’, many fields of scientific and engineering academia and industry are now engaging intensively with ML, so the transition to productive use may be fairly rapid. I briefly consider applications across the seismic life cycle before focusing on automated velocity model building (VMB).


Introduction to Artificial Intelligence

The traditional definition of ML is that it is a field of study that gives computers the ability to learn without being explicitly programmed. An alternative phrasing is that it gives computers the ability to be programmed implicitly, with a high level of abstraction in how the code is built. A common theme at the 2018 SEG conference in Anaheim was that ML can play a role where the physics is not understood or is not economically tractable. Even where the physics is not understood, ML derives mathematical models that can extract knowledge and patterns from data, most commonly using various forms of neural networks. ML includes supervised learning (when you know the question you are trying to ask and have examples of it being asked and answered correctly), unsupervised learning (when you don’t have answers and may not fully know the questions), and reinforcement learning (trial-and-error solving).
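To make the supervised case concrete, the sketch below learns a linear velocity-depth trend, v(z) = v0 + k·z, from labeled (depth, velocity) examples instead of hard-coding v0 and k. It is a toy illustration under stated assumptions: the trend values, the noise level, and the helper name `fit_linear_trend` are hypothetical, and it uses ordinary least squares rather than a neural network to stay short.

```python
import numpy as np


def fit_linear_trend(depth_m, velocity_ms):
    """Learn v(z) = v0 + k*z from labeled (depth, velocity) pairs by least squares."""
    A = np.column_stack([np.ones_like(depth_m), depth_m])  # design matrix [1, z]
    (v0, k), *_ = np.linalg.lstsq(A, velocity_ms, rcond=None)
    return float(v0), float(k)


# Hypothetical training set: noisy labels generated from v0 = 1500 m/s, k = 0.6 1/s.
rng = np.random.default_rng(42)
depth = rng.uniform(0.0, 4000.0, size=500)                                  # inputs (m)
velocity = 1500.0 + 0.6 * depth + rng.normal(0.0, 50.0, size=depth.size)   # labels (m/s)

v0_hat, k_hat = fit_linear_trend(depth, velocity)
v_at_2500 = v0_hat + k_hat * 2500.0   # apply the learned model to a new depth
```

The same pattern (fit on labeled pairs, then predict on new inputs) carries over when the linear model is replaced by a neural network and the inputs are seismic data rather than depths.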

3D seismic surveys acquire many thousands of highly redundant and overlapping snapshots of the subsurface (shot gathers). This redundancy can be exploited by many emerging ML applications across the entire seismic life cycle. From a seismic interpreter’s perspective, many emerging ML applications involve feature detection and classification of stratigraphic and morphological features, automated fault interpretation, and automated quantitative interpretation. From a seismic processing and imaging perspective, obvious applications include noise attenuation, aspects of VMB, signal reconstruction, and the optimization of parameters used in seismic imaging. In the remainder of this article, I focus on VMB.

Augmented and Automated Velocity Model Building

An accurate seismic velocity-depth model is the foundation for all multi-channel seismic signal processing and imaging outcomes, and it remains invaluable for many quantitative interpretation and reservoir modeling tasks. Refraction and reflection tomography have been the most popular VMB methods over the last two decades because, although they traditionally involve a high degree of human interaction between iterative updates, the algorithms are computationally efficient. Full Waveform Inversion (FWI) is rapidly gaining prominence, but it is computationally demanding, has no guarantee of global convergence, and traditionally benefits from an accurate starting model.
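The iterate-and-update character of tomography can be sketched with a toy linear problem: traveltimes are ray-path lengths times cell slownesses (t = G s), and each pass applies a damped least-squares update to the slowness model. This is a minimal sketch assuming straight rays, a hypothetical 3 x 3 grid of 1 km cells, and noise-free traveltimes; real refraction or reflection tomography retraces rays, measures residuals from picks or wavelet shifts, and is far larger. The helper names are illustrative only.

```python
import numpy as np


def ray_matrix(n):
    """Straight-ray path lengths (km) through an n x n grid of 1 km cells.

    Hypothetical geometry: one horizontal ray per row, one vertical ray per
    column, and one ray along the main diagonal (sqrt(2) km per cell).
    """
    rays = []
    for i in range(n):
        row = np.zeros((n, n))
        row[i, :] = 1.0
        rays.append(row.ravel())
    for j in range(n):
        col = np.zeros((n, n))
        col[:, j] = 1.0
        rays.append(col.ravel())
    diag = np.zeros((n, n))
    np.fill_diagonal(diag, np.sqrt(2.0))
    rays.append(diag.ravel())
    return np.array(rays)


def damped_update(G, s, t_obs, damping):
    """One damped least-squares (Levenberg-Marquardt style) slowness update."""
    residual = t_obs - G @ s
    lhs = G.T @ G + damping**2 * np.eye(G.shape[1])
    return s + np.linalg.solve(lhs, G.T @ residual)


n = 3
G = ray_matrix(n)
s_true = np.full(n * n, 1.0 / 2.5)   # 2.5 km/s background, as slowness (s/km)
s_true[4] = 1.0 / 2.0                # slow anomaly in the central cell
t_obs = G @ s_true                   # noise-free "picked" traveltimes (s)

s = np.full(n * n, 1.0 / 2.5)        # starting model without the anomaly
misfits = [np.linalg.norm(t_obs - G @ s)]
for _ in range(3):                   # each pass stands in for one tomography iteration
    s = damped_update(G, s, t_obs, damping=0.1)
    misfits.append(np.linalg.norm(t_obs - G @ s))
```

Because this toy problem is linear, almost all of the misfit disappears in the first pass; in production workflows rays are retraced and residuals re-measured between passes, which is where the human interaction described above traditionally comes in.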

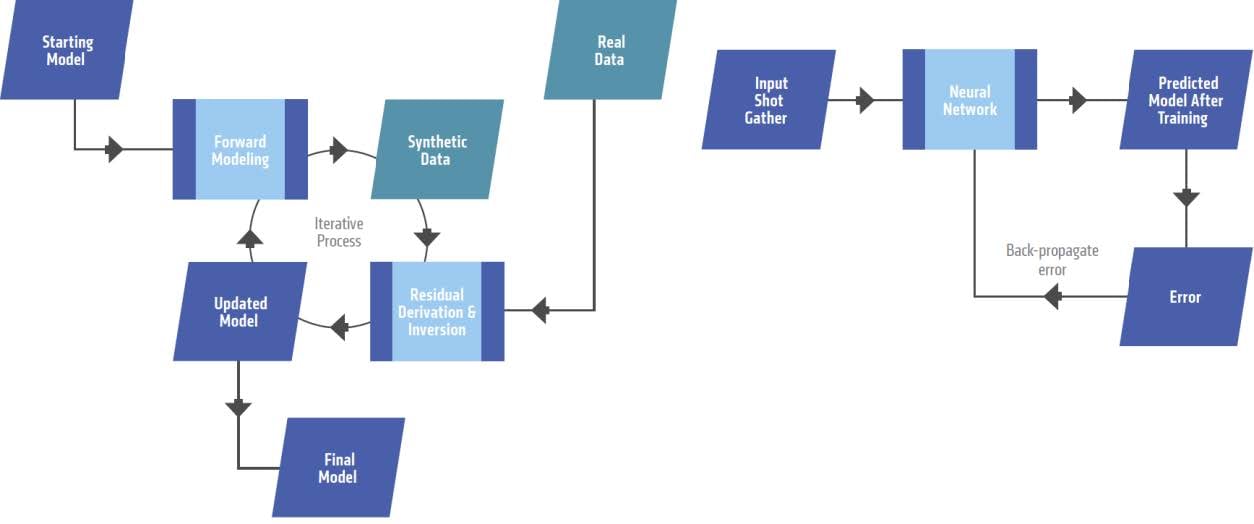

With regard to FWI, advances in regularization of the gradient term and the use of optimal transport norms in the misfit function have considerably improved the stability of FWI convergence and help avoid cycle skipping. In parallel, neural network-based solutions are being investigated to extrapolate ultra-low-frequency signal as a preconditioner to FWI, to further stabilize FWI convergence, and to estimate velocity models from modeled shot gathers as an alternative to FWI. For example, authors from Shell use DNNs to estimate 2D velocity models from raw shot gathers, training on thousands of synthetic models that contain a variety of geological features and faults. Authors from Equinor use a convolutional neural network (CNN) trained on pairs of randomly generated synthetic velocity models and the corresponding synthetic shot gathers produced with acoustic finite-difference modeling, an approach ‘similar’ to FWI. When the velocity models estimated for each individual shot gather (modeled from a known reference model) are stacked, the result resembles the background velocity trend, albeit imperfectly.
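Cycle skipping, which optimal-transport misfits and low-frequency extrapolation aim to mitigate, shows up even in a toy 1D experiment: with a conventional L2 misfit between an observed and a time-shifted modeled sinusoid, a local descent that starts more than half a period away from the true shift locks onto the wrong minimum, while the same start converges at a lower frequency. The sinusoids, frequencies, grid of trial shifts, and function names are illustrative assumptions, not any of the workflows cited above.

```python
import numpy as np


def l2_misfit(freq_hz, shift_s, t):
    """Least-squares misfit between 'observed' and time-shifted 'modeled' sinusoids."""
    observed = np.sin(2.0 * np.pi * freq_hz * t)
    modeled = np.sin(2.0 * np.pi * freq_hz * (t - shift_s))
    return 0.5 * np.sum((modeled - observed) ** 2)


def local_descent(freq_hz, start_shift_s, t, shifts):
    """Step downhill on the sampled misfit curve until no neighbor is lower.

    This mimics a local (gradient-based) optimizer: it can only reach the
    minimum of the basin it starts in. The true shift is 0 s.
    """
    misfits = np.array([l2_misfit(freq_hz, s, t) for s in shifts])
    i = int(np.argmin(np.abs(shifts - start_shift_s)))
    while True:
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < len(shifts)]
        best = min(neighbors, key=lambda j: misfits[j])
        if misfits[best] >= misfits[i]:
            return float(shifts[i])
        i = best


t = np.linspace(0.0, 4.0, 2000, endpoint=False)        # 4 s trace, 2 ms sampling
shifts = np.round(np.arange(-0.5, 0.5001, 0.001), 3)    # trial time shifts (s)
start = 0.08                                           # initial model error as a time shift (s)

high = local_descent(10.0, start, t, shifts)   # 10 Hz: half period 0.05 s < 0.08 s
low = local_descent(1.0, start, t, shifts)     # 1 Hz: half period 0.5 s > 0.08 s
```

With these settings the 10 Hz run stops at the neighboring minimum one period away (0.1 s), a cycle skip, whereas the 1 Hz run reaches the true shift of 0 s. This is the intuition behind supplying ultra-low frequencies to FWI.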

Pragmatic Automated Velocity Model Building

Stochastic simulation traces the evolution of variables that can change stochastically (randomly) with certain probabilities. Where there is interest in understanding the risk and uncertainty in model predictions and forecasts, as is clearly the case for automated VMB, Monte Carlo simulations can be used to model the probability of different outcomes that cannot easily be predicted because random variables are involved.
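A minimal Monte Carlo sketch of the idea: perturb the interval velocities of a hypothetical three-layer model with Gaussian noise, convert a reflector’s two-way time to depth for each realization, and read the spread of depths as an uncertainty estimate. The layer velocities and times, the 5% standard deviation, and the function name `monte_carlo_depth` are assumptions chosen for illustration, and independent Gaussian perturbations are only one of many possible choices.

```python
import numpy as np


def monte_carlo_depth(v_int, twt_int, rel_sigma, n_real=20000, seed=0):
    """Monte Carlo depth of a reflector under random interval-velocity errors.

    v_int     interval velocities (m/s), one per layer
    twt_int   two-way interval times (s), one per layer
    rel_sigma relative standard deviation of each velocity (e.g. 0.05 = 5%)
    Returns the mean depth, its standard deviation, and all realizations (m).
    """
    rng = np.random.default_rng(seed)
    v_int = np.asarray(v_int, dtype=float)
    twt_int = np.asarray(twt_int, dtype=float)
    # Each row is one realization; every layer velocity is perturbed independently.
    v_real = v_int * (1.0 + rel_sigma * rng.standard_normal((n_real, v_int.size)))
    # Depth = sum over layers of velocity x one-way interval time.
    depths = v_real @ (twt_int / 2.0)
    return depths.mean(), depths.std(), depths


# Hypothetical three-layer model: reflector at 1.5 s two-way time, nominal depth 1710 m.
mean_z, std_z, _ = monte_carlo_depth([1500.0, 2200.0, 3000.0], [0.4, 0.6, 0.5], rel_sigma=0.05)
```

For independent perturbations like these, the sampled standard deviation should approach the analytic value sqrt(sum_i (sigma·v_i·Δt_i / 2)^2), about 52 m here, which is a handy sanity check on the simulation.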

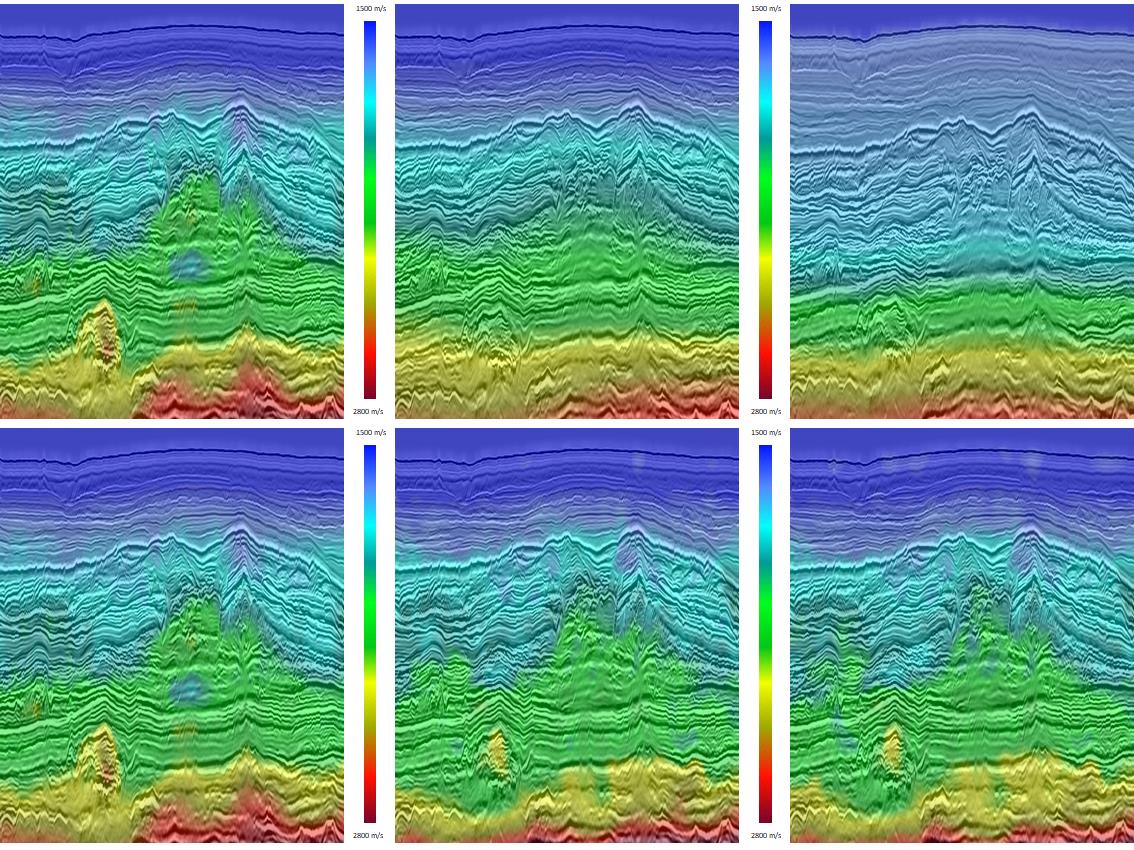

Authors from PGS have demonstrated how a wavelet-shift reflection tomography solution based on fast beam migration (hyperTomo) can be used in a pseudo-random implementation to generate uncertainty attributes for a final velocity model. On the one hand, these attributes can support risk mitigation during seismic imaging, target positioning, and the estimation of volumetrics; on the other hand, the solution can be adapted to estimate accurate velocity models from highly imperfect starting models in a fully automated manner. Figure 2 is from an upcoming PGS publication in which final 3D velocity models were computed from two real 3D starting models without any human interaction and compared to the best-known final model. The results are remarkably similar in both cases and could be achieved two orders of magnitude faster than traditional manual velocity picking followed by reflection tomography with human interaction between each iteration. A practical benefit of this approach (stochastic modeling as opposed to ML) is the computational efficiency of hyperTomo, which makes it possible to run hundreds or even thousands of model realizations on affordable computer clusters in a short timeframe.
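The idea of deriving uncertainty attributes from many automated realizations can be sketched generically: start a damped least-squares inversion from many randomly perturbed starting models and take the per-cell spread of the final models. This is not hyperTomo, which uses wavelet-shift residuals from fast beam migration; it is an assumed linear stand-in with a hypothetical 4-cell slowness model in which cells 2 and 3 are only sampled jointly by one ray, and the names `damped_solve` and `ensemble_uncertainty` are illustrative.

```python
import numpy as np


def damped_solve(G, d, m_start, damping):
    """Damped least squares: fit the data, stay near m_start where the data are silent."""
    lhs = G.T @ G + damping**2 * np.eye(G.shape[1])
    return m_start + np.linalg.solve(lhs, G.T @ (d - G @ m_start))


def ensemble_uncertainty(G, d, m_ref, rel_sigma, n_real=500, damping=0.05, seed=1):
    """Invert from many randomly perturbed starting models; return per-cell mean and std."""
    rng = np.random.default_rng(seed)
    finals = np.empty((n_real, m_ref.size))
    for k in range(n_real):
        m_start = m_ref * (1.0 + rel_sigma * rng.standard_normal(m_ref.size))
        finals[k] = damped_solve(G, d, m_start, damping)
    return finals.mean(axis=0), finals.std(axis=0)


# Hypothetical ray-length matrix (km): cells 0 and 1 each have their own ray,
# cells 2 and 3 are only crossed together by a single ray.
G = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 1.0]])
m_true = np.array([0.50, 0.45, 0.40, 0.42])   # slowness (s/km)
d = G @ m_true                                 # noise-free traveltimes (s)
m_ref = np.full(4, 0.5)                        # reference starting model

mean_model, uncertainty = ensemble_uncertainty(G, d, m_ref, rel_sigma=0.1)
```

Cells sampled by their own ray end up with a near-zero spread regardless of the starting model, whereas the spread in cells 2 and 3 stays comparable to the starting-model perturbation, flagging the part of the model the data cannot resolve.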

Summary

In coming years, the seismic industry will adopt a diverse range of AI solutions for many seismic problems, both to support better decision making and to significantly accelerate the cycle time of seismic projects. Computationally demanding processes such as velocity model building and seismic imaging are obvious targets for innovative ways of doing things much faster. Taking velocity model building as the example, although neural network-based alternatives to full waveform inversion (FWI) have been the most popular in the literature, stochastic modeling methods using highly efficient reflection tomography have also been shown to deliver accurate results up to two orders of magnitude faster. What is clear is that this arena is in its infancy, and pragmatic implementations are the most likely to move the field from inflated expectations to demonstrable success.
