First Break June 2020 'Seismic processing parameter mining – the past may be the key to the present'.

In a seismic processing project, the parameters used by each geophysical algorithm are tested. Combinations of parameters are checked, and semi-subjective decisions are made about the optimal values. For some steps, there may be numerous parameters to test. If an algorithm had 10 different parameters, each with three settings (say low, medium and high), there would be ~60 000 possible combinations to test. Add a fourth setting for each parameter, and the combinations exceed 1 000 000. An experienced geophysicist narrows the starting point based on their experience, so in practice we never run this many tests.
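The combination counts quoted above follow from simple exponentiation. A minimal sketch, assuming every parameter is tested independently with the same number of settings (the function name is illustrative only):

```python
def combination_count(n_parameters: int, n_settings: int) -> int:
    """Number of distinct parameter sets when every one of n_parameters
    parameters can take any of n_settings values."""
    return n_settings ** n_parameters


# 10 parameters with three settings each (low, medium, high)
three_settings = combination_count(10, 3)
# The same 10 parameters with one extra setting each
four_settings = combination_count(10, 4)

print(three_settings)  # 59049, i.e. roughly 60 000
print(four_settings)   # 1048576, i.e. just over 1 000 000
```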

The statistics in this example do not account for the dynamic nature of the data, which varies in all dimensions. The optimal parameters for one window of data might not be optimal for another, although they may be very close. If we want the best results everywhere, then for a typical ‘windowed’ process using 10 parameters and three settings, on a data set of 5 000 sq. km, the chance of being right everywhere is less than one trillionth of one percent. There is always a parameter null space, but the example does raise an interesting question of what is a good set of parameters, and what is not. This is particularly important if the seismic data has never been processed.
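The article does not say how the "one trillionth of one percent" figure was derived. As a rough illustration only, the sketch below assumes that each data window has exactly one optimal combination, that a combination is picked uniformly at random, and that windows are independent. It ignores the parameter null space, so it is a pessimistic toy model rather than the authors' calculation. All names are hypothetical.

```python
from fractions import Fraction

# 10 parameters with three settings each
N_COMBINATIONS = 3 ** 10

# One trillionth (1e-12) of one percent (1e-2), kept exact with Fraction
ONE_TRILLIONTH_OF_ONE_PERCENT = Fraction(1, 10 ** 12) / 100


def chance_right_everywhere(n_windows: int) -> Fraction:
    """Assumed model: one optimal combination per window, a uniform random
    guess in each window, and independent windows."""
    return Fraction(1, N_COMBINATIONS) ** n_windows


def windows_needed(threshold: Fraction) -> int:
    """Smallest number of windows for which the chance drops below threshold."""
    n = 1
    while chance_right_everywhere(n) >= threshold:
        n += 1
    return n


print(windows_needed(ONE_TRILLIONTH_OF_ONE_PERCENT))  # 3
```

Under these assumptions only three independent windows are enough to fall below the quoted threshold, and a survey of this size contains far more windows than that.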

What is the maximum time saving that automation can deliver for a seismic processing project of 3 000 sq. km, which would normally take 9.5 months: 10, 12 or 24 weeks?

10 weeks? Learn what happens when data mining and conventional velocity model building are implemented.

12 weeks? Learn what happens when conventional signal processing and automated model building are implemented.

24 weeks? Learn what happens when data mining and automated model building are implemented.

Is Any Image Degradation Worth the Time Saved?

The goal of the work was to understand the impact on quality and turnaround of replacing the testing of seismic processing parameters with parameters mined from a collectivized, digitalized experience database. The database contains the parameters used for key steps in previous processing projects. The parameter mining extracts trends based on key criteria. All required parameters are determined in advance of the project and used to populate workflows that are run back-to-back. The trial used a subset of seismic data from Sabah in Malaysia, and compared the results to a full-integrity processing project on the same data.
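The article does not describe the database schema or the mining criteria, so the following is only a sketch of the general idea: filter past projects on a few key criteria, then take a robust central value for each parameter. The record fields, the criteria (processing step, acquisition type, water depth) and every value are invented for illustration and do not come from the PGS database.

```python
from statistics import median

# Hypothetical experience database: one record per past project and step.
# All field names and values are invented placeholders.
DATABASE = [
    {"step": "denoise", "acquisition": "towed streamer", "water_depth_m": 80,
     "params": {"window_ms": 400, "threshold": 2.5}},
    {"step": "denoise", "acquisition": "towed streamer", "water_depth_m": 100,
     "params": {"window_ms": 500, "threshold": 3.0}},
    {"step": "denoise", "acquisition": "towed streamer", "water_depth_m": 120,
     "params": {"window_ms": 450, "threshold": 2.0}},
    {"step": "denoise", "acquisition": "towed streamer", "water_depth_m": 1500,
     "params": {"window_ms": 900, "threshold": 4.0}},
]


def mine_parameters(database, step, acquisition, water_depth_m,
                    depth_tolerance_m=50):
    """Return the median of each parameter over past projects that match
    the processing step, the acquisition type and a water-depth range."""
    matches = [
        record["params"]
        for record in database
        if record["step"] == step
        and record["acquisition"] == acquisition
        and abs(record["water_depth_m"] - water_depth_m) <= depth_tolerance_m
    ]
    if not matches:
        raise LookupError("no comparable past project in the database")
    return {name: median(p[name] for p in matches) for name in matches[0]}


mined = mine_parameters(DATABASE, "denoise", "towed streamer", 100)
print(mined)  # {'window_ms': 450, 'threshold': 2.5}
```

The median is used here because it is less sensitive than the mean to an unusual past project. A real implementation would need richer criteria and some check on how well the matched projects agree with each other.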

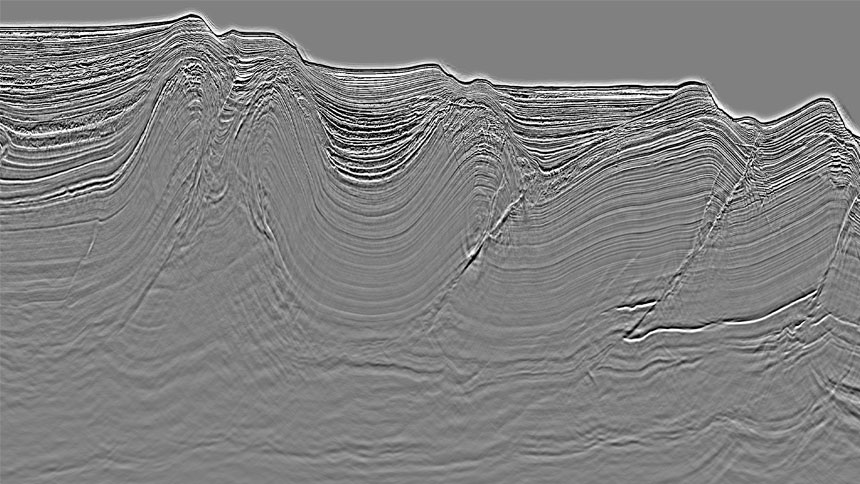

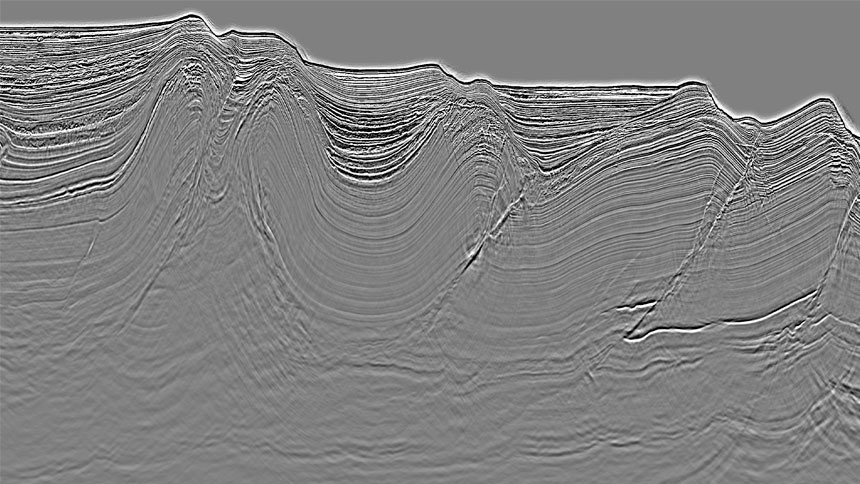

The team created quantitative metrics of quality and of similarity between the two volumes. Both the raw seismic data sets and the metrics showed a striking similarity. The test was run up to and including the migration step, for which we relied on the velocity model from the full-integrity project. In the images shown, the first question is whether you can spot the differences between the two volumes.

The figure shows stacked data, which is often a leveller of quality. Despite this, there is almost no difference between the two data sets; however, by bypassing the parameter testing, there was a significant reduction in turnaround.
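The article does not name the similarity metrics the team used. One widely used measure for comparing two seismic traces or volumes is the normalized RMS difference (NRMS), which is 0% for identical data and 200% for data of equal amplitude and opposite polarity. The sketch below shows that metric on plain Python lists. It is an assumed stand-in, not the metric from the paper.

```python
import math


def rms(trace):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in trace) / len(trace))


def nrms_percent(a, b):
    """Normalized RMS difference between two equally sampled traces:
    200 * RMS(a - b) / (RMS(a) + RMS(b)), expressed in percent."""
    if len(a) != len(b):
        raise ValueError("traces must have the same number of samples")
    denominator = rms(a) + rms(b)
    if denominator == 0:
        return 0.0  # both traces are all zeros, so they are identical
    difference = [x - y for x, y in zip(a, b)]
    return 200.0 * rms(difference) / denominator


# Illustrative samples only, not real seismic data
full_integrity = [0.0, 1.0, -2.0, 3.0, -1.0]
mined_parameters = [0.0, 1.1, -1.9, 3.0, -1.0]

print(nrms_percent(full_integrity, full_integrity))  # 0.0
print(round(nrms_percent(full_integrity, mined_parameters), 1))
```

Low NRMS values indicate that the two results are close. On real volumes the metric would normally be computed per trace or per window and mapped, rather than reduced to a single number.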

The proof-of-concept project described in June’s First Break paper outlines an approach that uses crude data mining to maintain data quality while reducing project turnaround times. There are some caveats, but the collectivized experience of all PGS staff is undoubtedly a powerful tool to harness. It may help reduce testing time, or deliver high-quality fast-track products.

For more information on tailoring your workflow contact imaging.info@pgs.com.
